Stochastic gradient descent (often abbreviated SGD) is an iterative method for optimizing an objective function with suitable smoothness properties (e.g. differentiable or subdifferentiable). The algorithm proceeds as follows:

1. Choose an initial estimate of the parameters and a learning parameter.
2. Produce a random permutation of the data points.
3. For each data point in the permutation, update the parameters by moving in the direction of the negative gradient of that point's loss. The amount of travel in that direction is specified by the learning parameter of the algorithm.
4. Repeat steps 2 and 3 until some convergence criterion is met.
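The steps above can be sketched as a small generic loop in Python. This is a minimal sketch, not a reference implementation: the helper name `sgd`, its parameters, the early-stopping tolerance plus a fixed epoch cap used as the convergence criterion, and the toy per-point loss ½(θ − x)² (whose minimizer over the dataset is the sample mean) are all assumptions made for illustration.

```python
import random


def sgd(grad_point, theta0, data, lr=0.05, epochs=200, tol=1e-10, seed=0):
    """Generic SGD loop (hypothetical helper) following steps 1-4.

    grad_point(theta, point) returns the gradient of one data point's loss
    as a list of floats with the same length as theta.
    """
    rng = random.Random(seed)
    theta = list(theta0)              # step 1: initial estimate; lr is the learning parameter
    data = list(data)                 # copy so the caller's list is not reordered
    for _ in range(epochs):
        rng.shuffle(data)             # step 2: random permutation of the data points
        previous = list(theta)
        for point in data:            # step 3: move against each point's gradient
            grad = grad_point(theta, point)
            theta = [t - lr * g for t, g in zip(theta, grad)]
        # step 4: stop early if a whole epoch barely moved the parameters,
        # otherwise stop after `epochs` passes
        if max(abs(a - b) for a, b in zip(theta, previous)) < tol:
            break
    return theta


# Toy problem (assumption): per-point loss 0.5 * (theta - x)**2, whose
# minimiser over the whole dataset is the sample mean.
data = [1.0, 2.0, 3.0, 4.0]
theta = sgd(lambda th, x: [th[0] - x], [0.0], data)
print(theta)  # close to [2.5]
```

With a constant learning parameter the estimate keeps wobbling slightly around the minimizer from one permutation to the next; decreasing the learning rate over time is a common way to reduce that wobble.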

STOCHASTIC GRADIENT DESCENT UPDATE

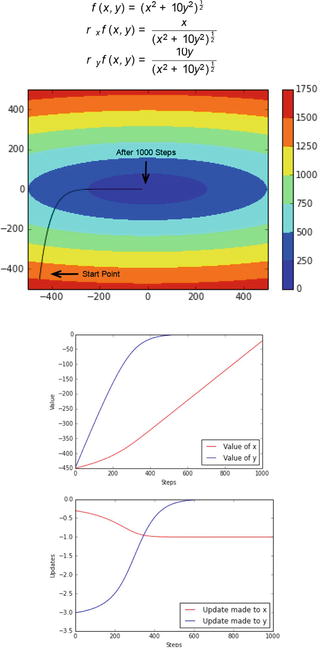

Stochastic gradient descent is a variant of gradient descent used for optimizing machine learning models. It is a simple gradient-based optimization algorithm used in machine learning and deep learning for training artificial neural networks, and tremendous advances in large-scale machine learning and deep learning have been powered by this seemingly simple and lightweight method. SGD has become the method of choice for training highly complex and nonconvex models, since it can not only recover good solutions that minimize training error but also generalize well.

The main difference between the two methods is how much data each update uses. Batch gradient descent computes the gradient of the cost function with respect to the model parameters using the entire training dataset, so all n data points are used to obtain the new parameters at each step and the computational cost of each parameter update is O(n), growing linearly with n. In stochastic gradient descent, only one randomly chosen training example is used to calculate the gradient and update the parameters at each iteration, so the cost of an update does not depend on n. Within each training epoch, SGD visits every example in the dataset once and updates the parameters after each one. Because only one training example needs to be held at a time, each update is also easier to fit in memory.

Computational and statistical properties of SGD are usually studied separately in the literature, and little work considers them jointly in a nonconvex learning setting. One study addresses this gap by developing learning rates of SGD for nonconvex learning, with high-probability bounds for both the computational and the statistical errors. It shows that the complexity of the SGD iterates grows in a controllable manner with the iteration number, which suggests that implicit regularization can be achieved by tuning the number of passes over the data to balance the computational and statistical errors. As a byproduct, it slightly refines existing results on the uniform convergence of gradients by showing their connection to Rademacher chaos complexities. Its analysis is stated in terms of a pair of dual norms ‖·‖ and ‖·‖_*.

As a worked example, consider a simple regression function $y = \beta_0 + \beta_1 x$. The parameters of the function are the intercept $\beta_0$ and the slope $\beta_1$. The errors associated with each data point are assumed to be independent and normally distributed with variance $\sigma^2$. Maximum-likelihood estimation uses the joint likelihood of all the data points to learn the parameters of the regression line. Given a sample data point $(x_i, y_i)$, the likelihood of the parameters is

$$L(\beta_0, \beta_1 \mid x_i, y_i) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\!\left(-\frac{(y_i - \beta_0 - \beta_1 x_i)^2}{2\sigma^2}\right).$$

Stochastic gradient descent uses each data point to iteratively update the estimated parameters, moving across the likelihood surface in the direction of the negative gradient of each point's negative log-likelihood, which up to an additive constant is $(y_i - \beta_0 - \beta_1 x_i)^2 / (2\sigma^2)$. In other words, given a model and data, SGD is run to optimize the loss function induced by the likelihood.

Extensions to try: apply the technique to other regression problems from the UCI Machine Learning Repository, or change the stochastic gradient descent algorithm to accumulate updates across each epoch and only update the coefficients in a batch at the end of the epoch. In a related exercise, you train a neural network on the Fuel Economy dataset and then explore the effect of the learning rate and batch size on SGD.
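The simple regression example and the suggested end-of-epoch batch variant can be sketched together in Python. This is a minimal sketch under stated assumptions, not the original tutorial's code: `fit_line_sgd` and its parameters are hypothetical names, σ² is folded into the learning rate, and the data are a noise-free synthetic line y = 1 + 2x, chosen so that both variants should recover the generating coefficients. For a residual r_i = y_i − β₀ − β₁x_i, the negative gradient of the point's loss is proportional to r_i·(1, x_i), so each step adds lr·r_i to β₀ and lr·r_i·x_i to β₁.

```python
import random


def fit_line_sgd(xs, ys, lr=0.02, epochs=2000, batch_per_epoch=False, seed=0):
    """Fit y = b0 + b1 * x by SGD (hypothetical helper).

    Under the Gaussian-error assumption each point's negative log-likelihood is
    (y - b0 - b1 * x)**2 / (2 * sigma**2) plus a constant; sigma**2 is folded
    into lr. With batch_per_epoch=True, updates are summed over the epoch and
    applied once at its end instead of after every point.
    """
    rng = random.Random(seed)
    b0, b1 = 0.0, 0.0                      # initial estimate of intercept, slope
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)                 # random permutation of the points
        acc0, acc1 = 0.0, 0.0              # accumulated updates (batch mode only)
        for i in order:
            residual = ys[i] - (b0 + b1 * xs[i])
            # negative point gradient is residual * (1, x); scale it by lr
            step0, step1 = lr * residual, lr * residual * xs[i]
            if batch_per_epoch:
                acc0, acc1 = acc0 + step0, acc1 + step1
            else:
                b0, b1 = b0 + step0, b1 + step1
        if batch_per_epoch:
            b0, b1 = b0 + acc0, b1 + acc1
    return b0, b1


# Noise-free synthetic line y = 1 + 2x (assumption, for a checkable result).
xs = [i / 20 for i in range(20)]
ys = [1.0 + 2.0 * x for x in xs]
per_example = fit_line_sgd(xs, ys)
per_epoch = fit_line_sgd(xs, ys, batch_per_epoch=True)
print(per_example, per_epoch)  # both very close to (1.0, 2.0)
```

In batch mode the updates are summed rather than averaged, so the effective step grows with the number of points; on larger datasets the learning rate would need to shrink (or the sum be divided by n) to keep the end-of-epoch update stable. Adding Gaussian noise to `ys` turns this into the setting described above, where the estimates settle near the least-squares line instead of exactly on the generating one.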
